⚡💼 37% more productive in your job thanks to AI

A brief timeline of artificial intelligence:

1957 - Perceptron Mark I: the first implementation of a neural network. Its most sophisticated version could distinguish photos of men and women (more or less).

1965 - The first chatbot “Eliza” was created as a parody, but everyone felt like they were in the future. (You can try it here).

1979 - Neocognitron: a precursor to the visual recognition systems we have today. It was capable of recognizing handwriting.

1986 - NavLab1: the first autonomous car. It's worth watching this video, where it crawls along at an amusing 1 km/h.

1987 - NETtalk: the first system that learned to pronounce written text aloud. It sounded quite robotic, but it worked.

1997 - Deep Blue defeats a world chess champion (Garry Kasparov) for the first time. Contrary to what one might think, Kasparov became a global spokesperson for the adoption of AI.

2011 - IBM Watson wins on the American television show “Jeopardy!”. Siri, the first mass-market voice assistant, also launches on the iPhone.

2016 - Google’s AlphaGo defeats Go world champion Lee Sedol, who would retire in 2019 saying that AI had become an opponent that cannot be defeated. I recommend watching the Netflix documentary.

2017 - A pivotal moment. Here, the pace begins to accelerate with something that goes unnoticed by many: Google releases the paper “Attention is all you need”. The paper proposes the “Transformer” architecture, which opens the door to the large generative models we know today, like ChatGPT (the “T” in GPT stands for “Transformer”).

May 2020 - OpenAI launches GPT-3. Developers, quietly, begin to test the API and are amazed by the results.

January 2021 - As if that weren't enough, OpenAI launches another generative tool, this time for images: Dall-E. Talking about AI generators starts to go mainstream.

June 2021 - GitHub launches the technical preview of its programming assistant “Copilot”, and programmers split into two groups: those who fear for their jobs and those who become 10x more productive.

July 2022 - Dall-E faces competition with the launch of Midjourney, now considered one of the best AI image generators.

November 2022 - ChatGPT launches. With 100 million users in its first two months, it becomes the fastest-growing consumer application in history up to that point.

February 2023 - What no one expected: people start talking about Bing again. Microsoft begins to capitalize on the investment it made in OpenAI four years ago and starts implementing its AI in its products. The first: Bing and Edge.

March 2023 - A battle of giants. Microsoft and Google announce generative AI features for their Office and Workspace suites, respectively. Microsoft takes the lead after Google's botched launch of its ChatGPT competitor, Bard.

March 2023 - Just days later, we receive another gift: GPT-4. Even though the model finished training in August 2022, OpenAI took its time to “align” its responses and ensure that GPT-4 doesn’t talk about the benefits of eating glass.

Across Twitter and LinkedIn, people are trying to predict how this technology will change the way we work. Fortunately, we no longer need to speculate. We have some scientific literature on the subject. In particular, two papers catch my attention.

The first recruited 95 programmers through the freelance platform Upwork, randomly assigned them to two groups (control and treatment), and gave both groups the same project. The control group worked as they normally would, while the treatment group was given access to the programming assistant GitHub Copilot.

An example of how Copilot can help write code. You tell it you want a function that sends a tweet using the Twitter API, and it does the work for you.

The treatment group took 55% less time than the control group.

Let me reiterate: 55% less.

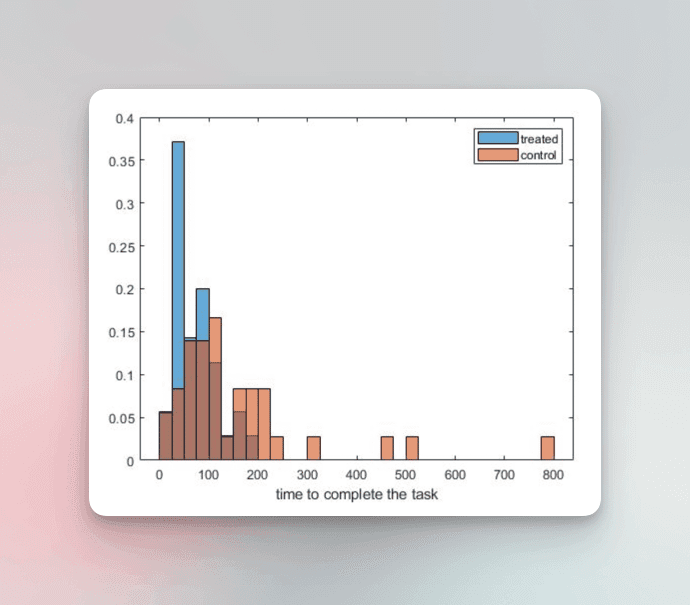

Distribution of time taken by each group to complete the project in minutes. The important thing: the blue bars (average: 71 min) are further to the left than the orange ones (average: 160 min).
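A quick sanity check on that figure, as a small Python sketch. The function names are my own (they do not come from the paper), and the only inputs are the two averages from the caption. It also shows that "55% less time" and "55% more output" are not the same thing: halving the time roughly doubles what fits in a working day.

```python
def time_reduction(control_minutes: float, treatment_minutes: float) -> float:
    """Fraction of time the treatment group saved relative to the control group."""
    return 1 - treatment_minutes / control_minutes


def speedup(control_minutes: float, treatment_minutes: float) -> float:
    """How many treatment-group tasks fit in the time of one control-group task."""
    return control_minutes / treatment_minutes


# Average completion times from the chart caption, in minutes.
control_avg = 160
treatment_avg = 71

print(f"Time saved: {time_reduction(control_avg, treatment_avg):.1%}")  # Time saved: 55.6%
print(f"Throughput: {speedup(control_avg, treatment_avg):.2f}x")        # Throughput: 2.25x
```

In other words, at those averages a programmer with Copilot could finish a bit more than two such projects in the time the control group needs for one.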

Imagine if everyone finished their work in 55% less time starting today. And the most surprising part? GitHub has just announced Copilot X, its improved version. My prediction is that the time savings will keep growing, and so will the sophistication of the work.

These productivity improvements are not exclusive to the programming world.

Another paper conducted a similar experiment. It recruited 444 specialists in marketing, consulting, data analysis, and management, and assigned them a task related to their daily work, such as writing press releases, reports, analyses, or detailed emails. Again, the group was randomly divided, and the treatment group was given access to ChatGPT. The time taken to complete the tasks was recorded, and the results were evaluated by several experts in each field.

The group with access to AI completed the task in 37% less time.

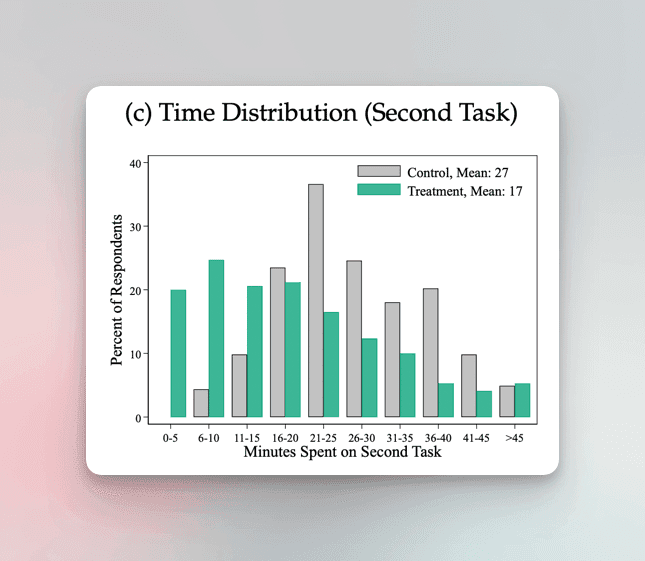

What stands out to me in this graph is that in the control group (gray), no one finished in less than 5 minutes, and very few between 6 and 10 minutes, in contrast to several people in the treatment group. Probably, many of them just asked the AI, “write an email saying X” and copied and pasted the result.

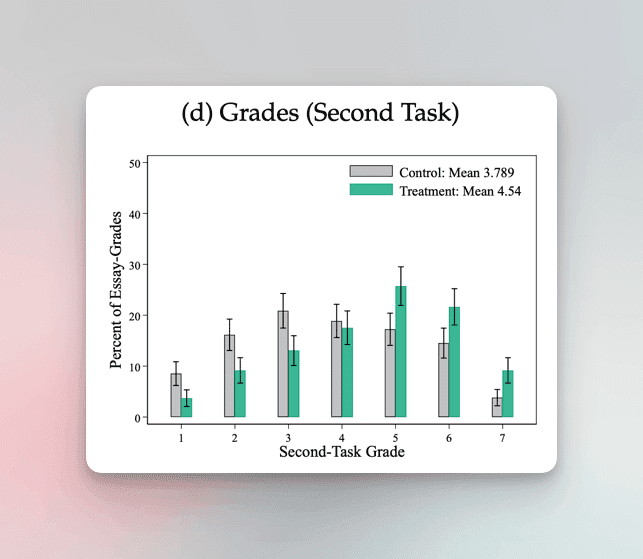

Not only that. Their work was also rated higher by the expert evaluators.

The individuals in the treatment group were faster and still achieved better results.

What reflections do I take from all this?

Even if advancements in AI were to stop today, they have already been sufficient to change the way we work and learn. Many knowledge workers will find enormous benefits in using this type of technology, as well as significant risks if they fail to get on board. The way we teach and learn will also need to adapt.

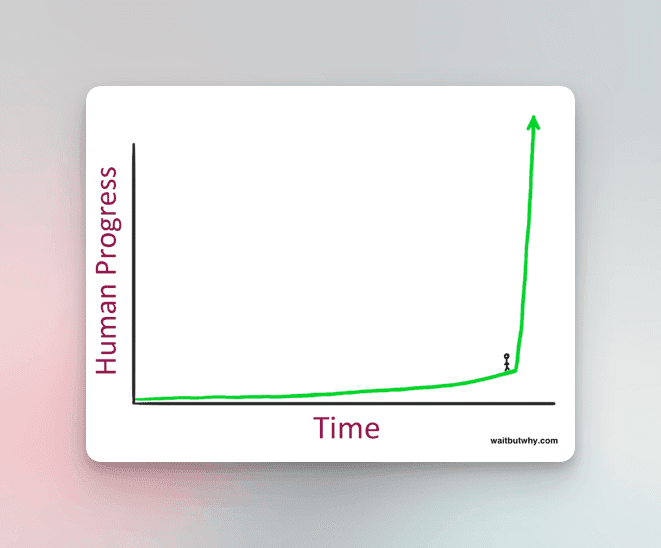

The pace of change will continue to accelerate. One thing I learned while creating the timeline above is that there have been more advancements in AI in the last 12 months than in the previous 40 years. At times, I feel like this:

On the brink of a trajectory change. Image borrowed from this excellent article that discusses the basics of artificial intelligence and the “singularity”.