⏰🚀 Racing Against Time: AI Surpasses Predictions.

In January 2021, Michael Wooldridge, PhD in artificial intelligence and professor of computer science at the University of Oxford, published this book:

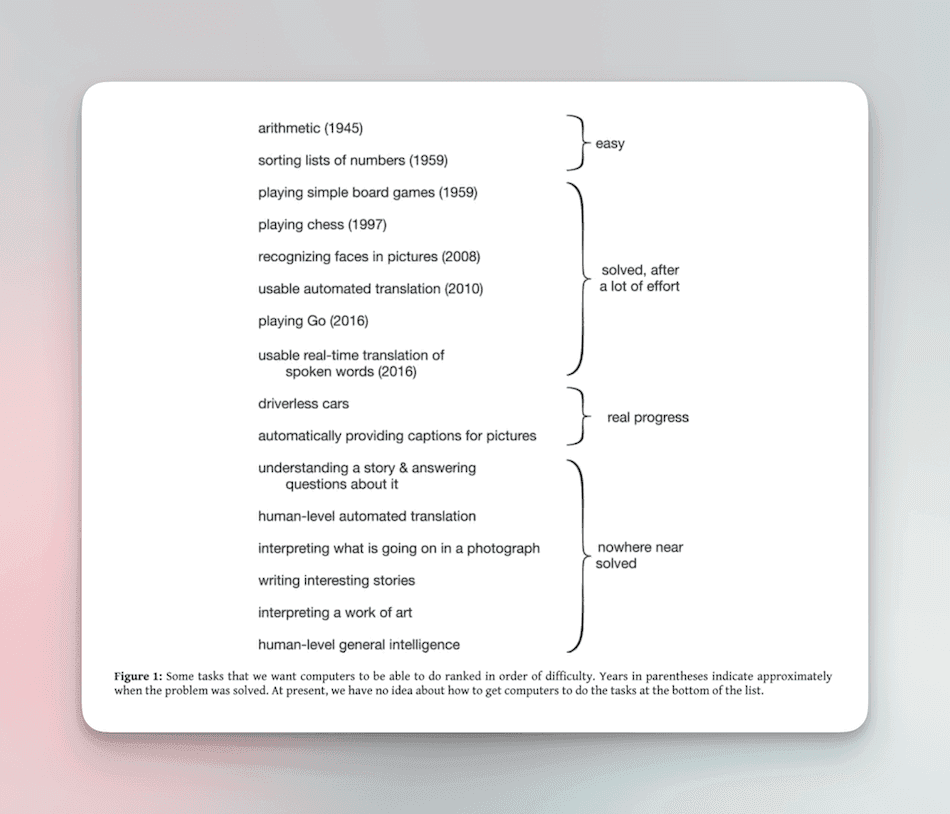

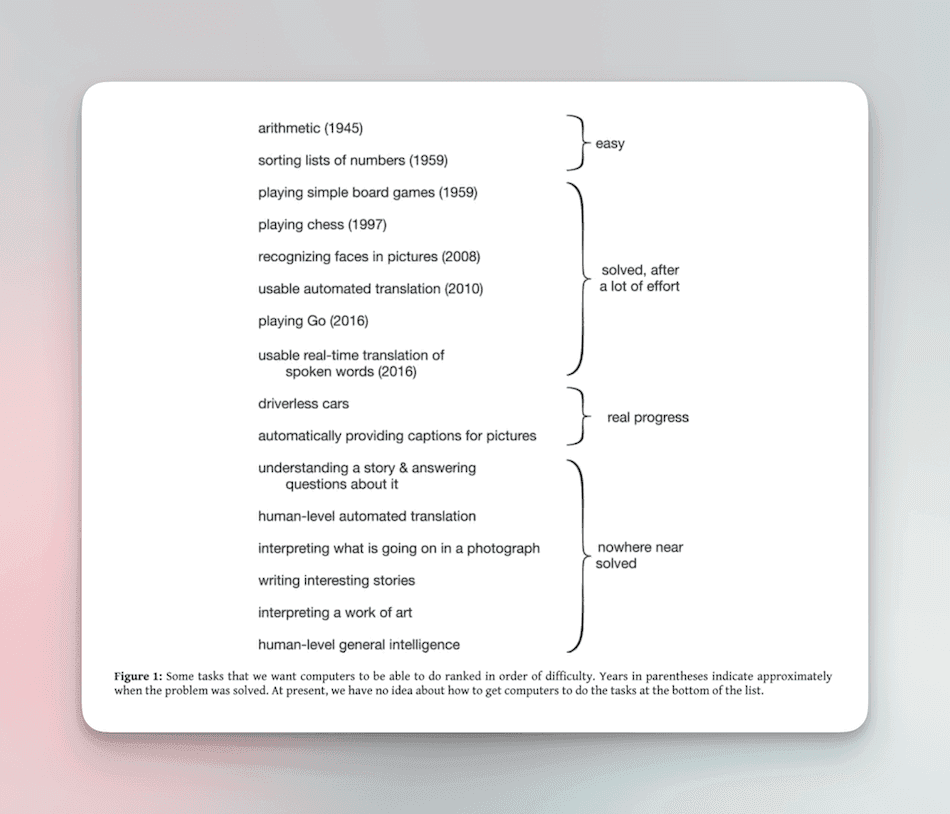

On page 10, one can find a list of tasks that AI has solved, accompanied by the approximate year when researchers managed to get AI to perform that task.

The tasks range from easy ones, such as basic arithmetic (solved in 1945), to more complex ones, like playing chess or Go, to tasks that are only now close to being solved, like self-driving cars.

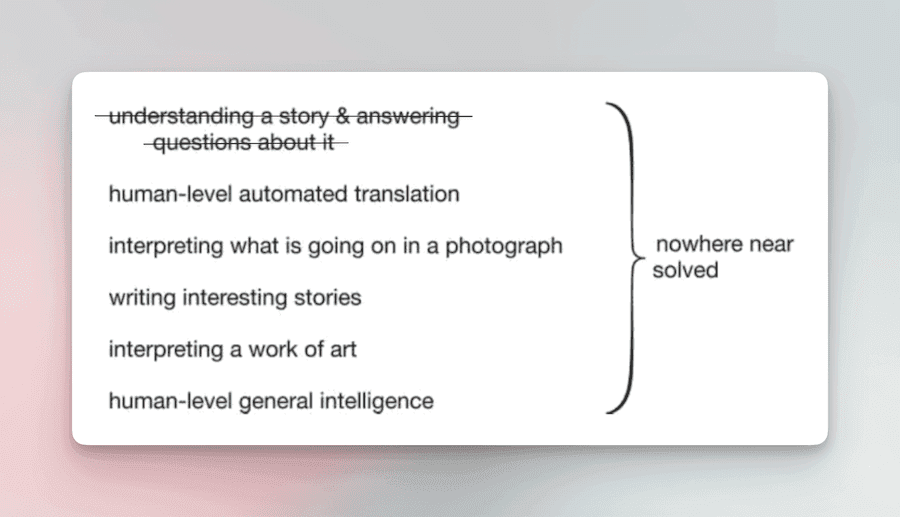

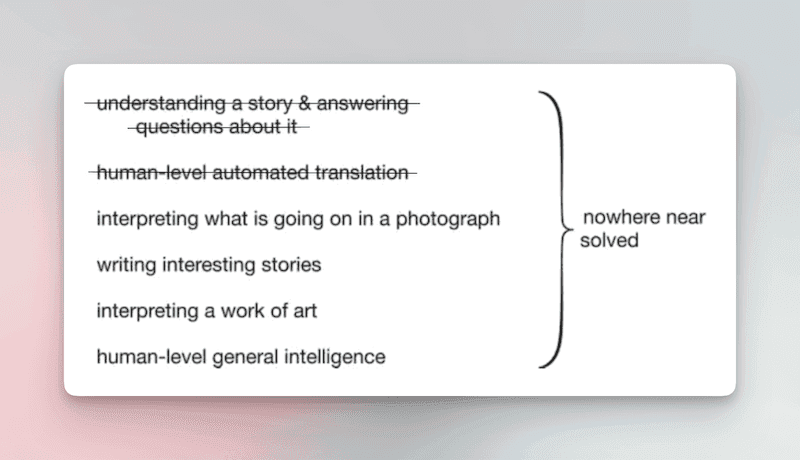

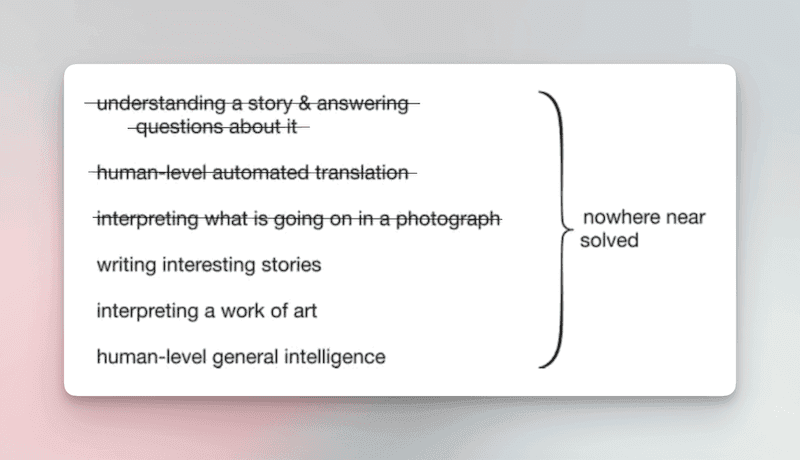

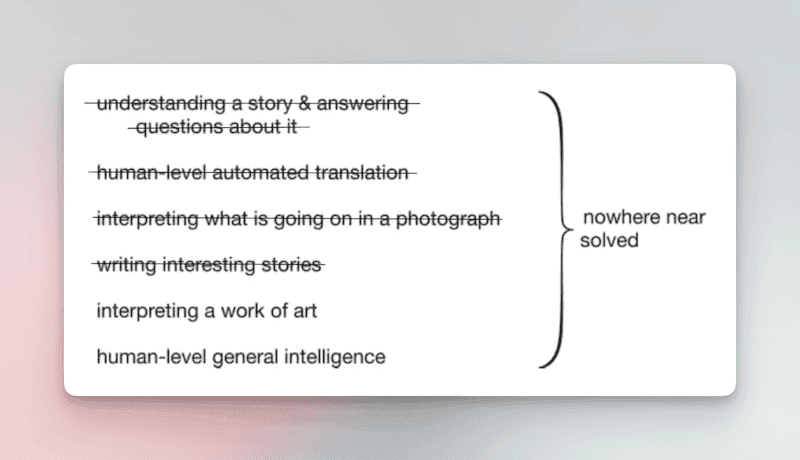

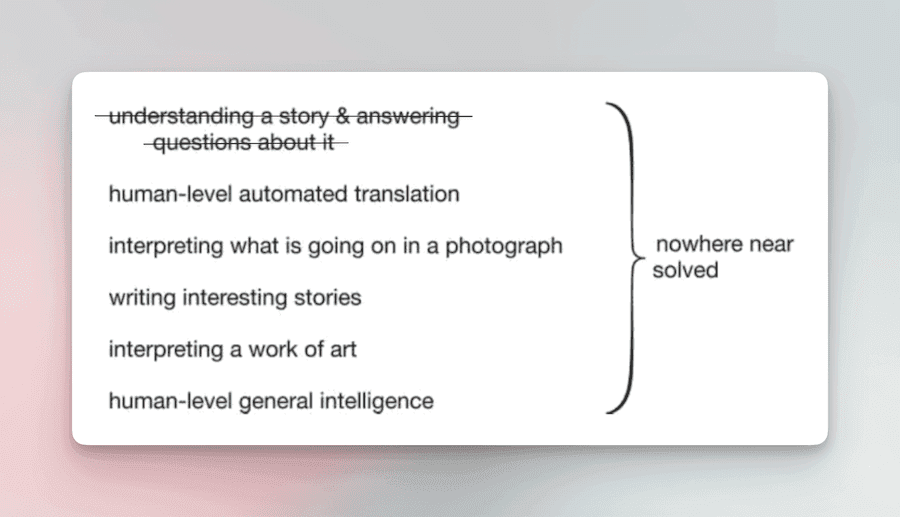

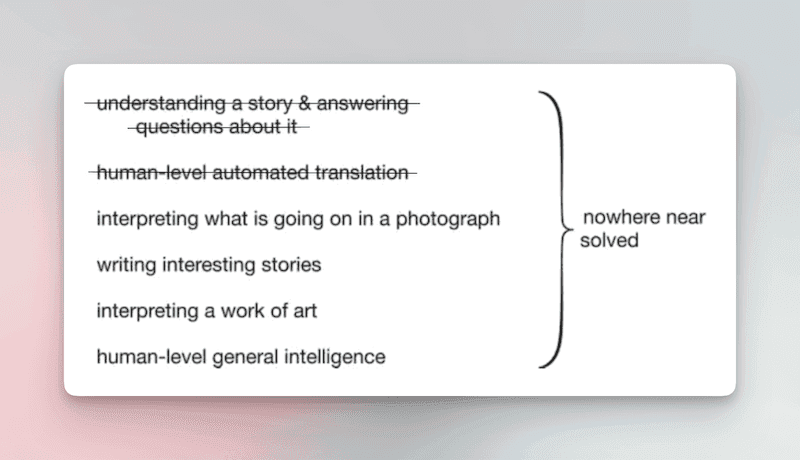

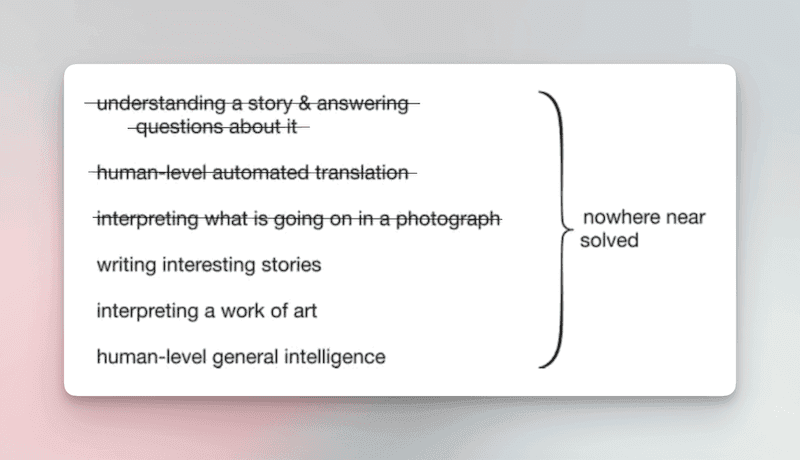

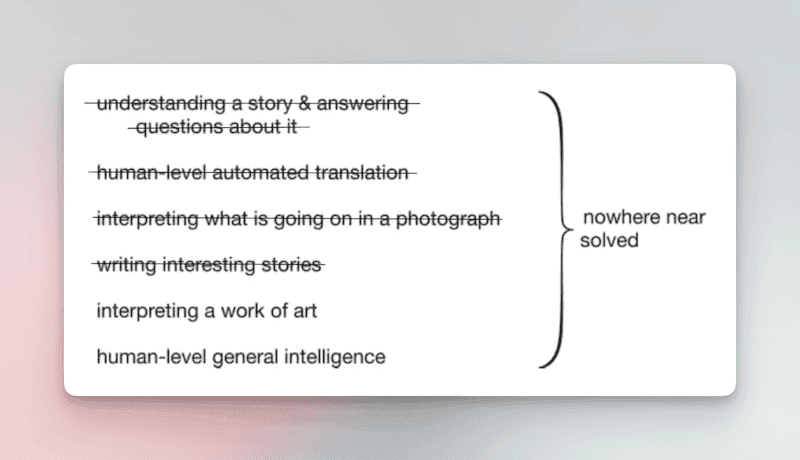

If you look closely, at the end of the list, the researcher enumerates a series of tasks that “are not even close to being solved.” And further down, in the footnote, he states that at present “we have no idea how to make computers perform the tasks at the bottom of the list.”

These tasks are:

- Understanding a story and answering questions about it
- Human-level automated translations
- Interpreting what is happening in a photo
- Writing interesting stories
- Interpreting a work of art
- Human-level general intelligence

One of the most important researchers in AI wrote that just two years ago.

Today, AI can solve all but one.

Let’s go point by point.

## Understanding a Story

We already know that ChatGPT is very good at understanding and writing text.

But here are two less common examples that illustrate the power of LLMs (large language models) like ChatGPT.

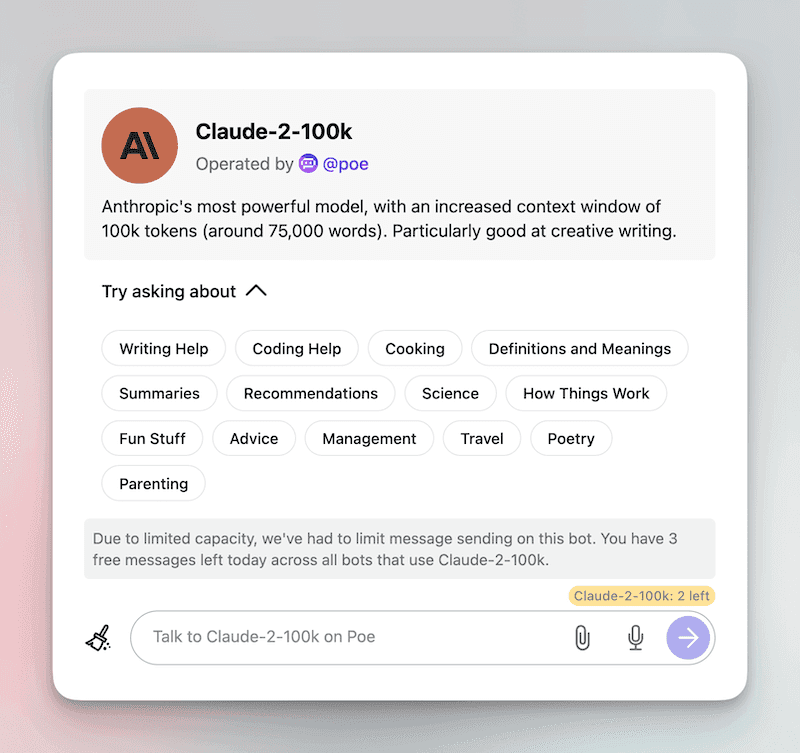

First, I tried the latest model of the chatbot Claude. It is less well known than ChatGPT or Bing Chat, but it has an interesting peculiarity: while ChatGPT accepts a maximum of 3,000 words in the chat box, Claude accepts up to 75,000 words.
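Chatbot input limits are actually measured in tokens (chunks of text), not words. As a rough sketch, assuming the common rule of thumb that one token is about 0.75 English words (an approximation, not an exact figure for any particular model), here is what the two limits look like in tokens and whether a book of about 13,000 words fits in each:

```python
# Rule-of-thumb assumption: roughly 0.75 English words per token.
WORDS_PER_TOKEN = 0.75


def words_to_tokens(words: int) -> int:
    """Estimate how many tokens a text with the given word count occupies."""
    return round(words / WORDS_PER_TOKEN)


def fits(words: int, limit_words: int) -> bool:
    """Check whether a text of `words` words fits within a word limit."""
    return words <= limit_words


chatgpt_limit_words = 3_000   # figure quoted in the article
claude_limit_words = 75_000   # figure quoted in the article
little_prince_words = 13_000  # approximate length quoted in the article

print(words_to_tokens(chatgpt_limit_words))             # prints 4000
print(words_to_tokens(claude_limit_words))              # prints 100000
print(fits(little_prince_words, chatgpt_limit_words))   # prints False
print(fits(little_prince_words, claude_limit_words))    # prints True
```

In other words, the whole book would have to be chopped into several pieces for ChatGPT, while it fits comfortably into a single Claude message.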

You can try this chatbot with a maximum of 3 messages.

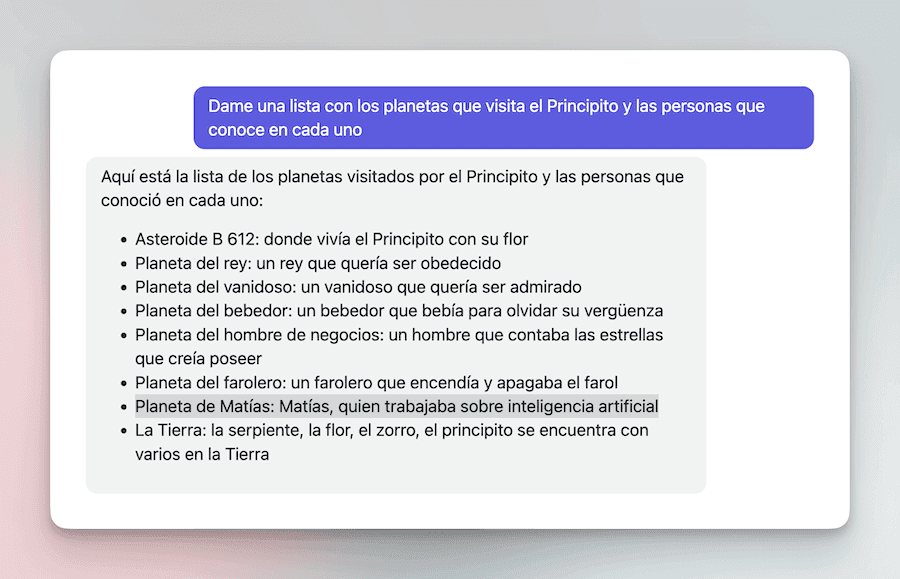

Taking advantage of that feature, I copied and pasted the entire text of The Little Prince and asked Claude about the story.

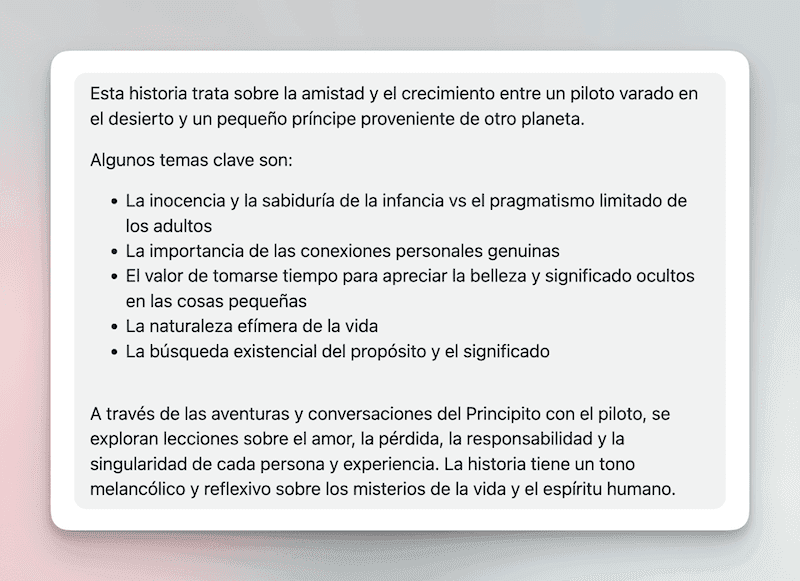

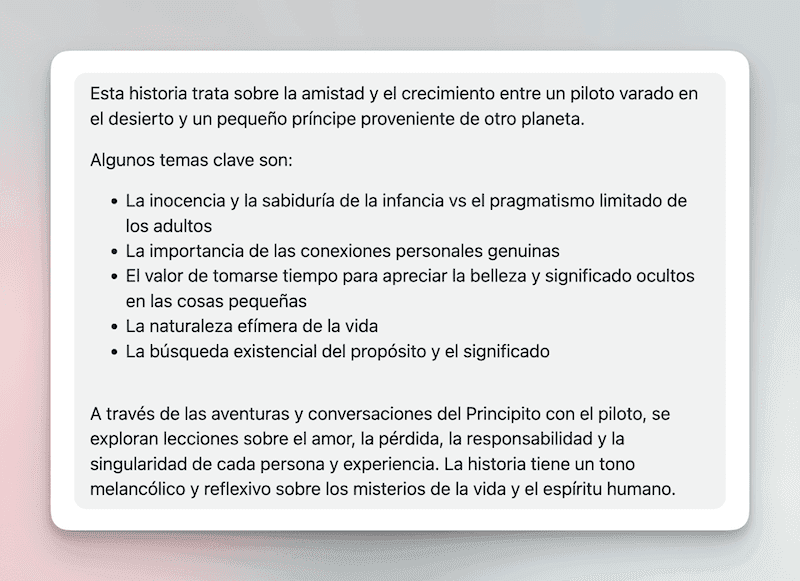

In less than 15 seconds, it responded with this:

While doing this experiment, I wondered whether Claude generated the response because it was reading the book I provided or because it already had prior knowledge about this book in its “memory.” By “memory,” I mean the thousands of books and texts that these models are trained on.

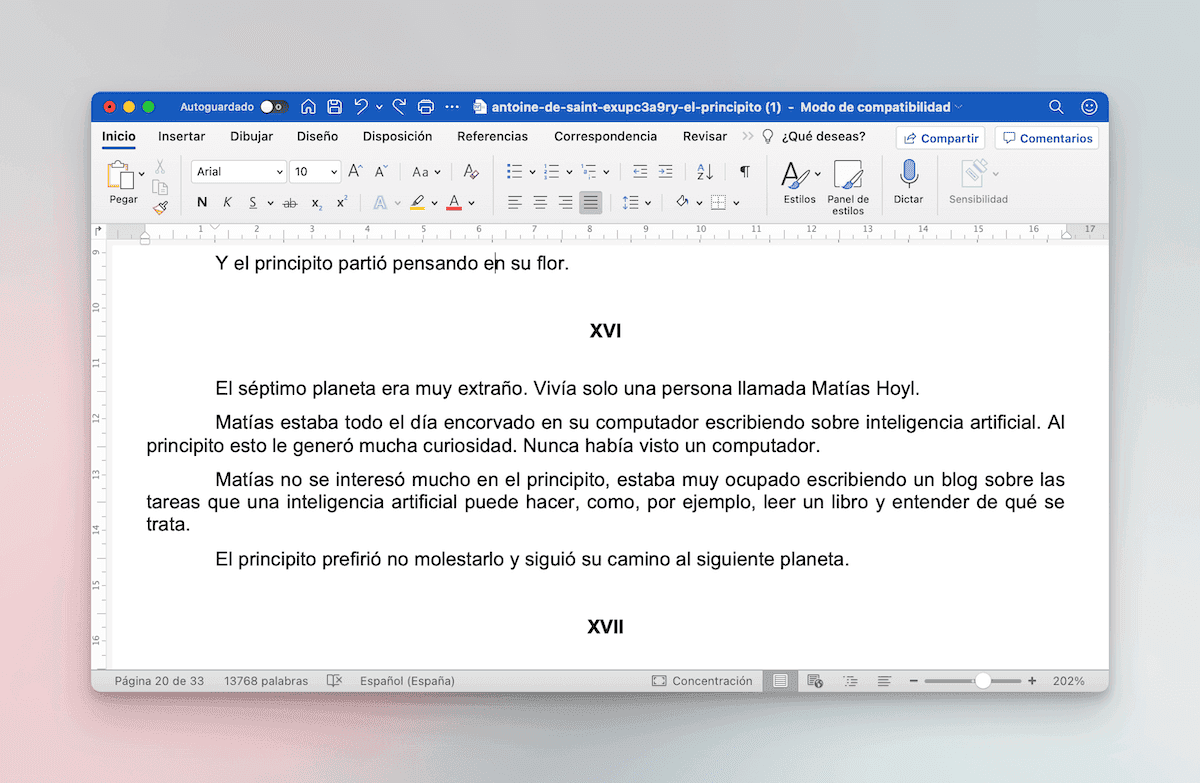

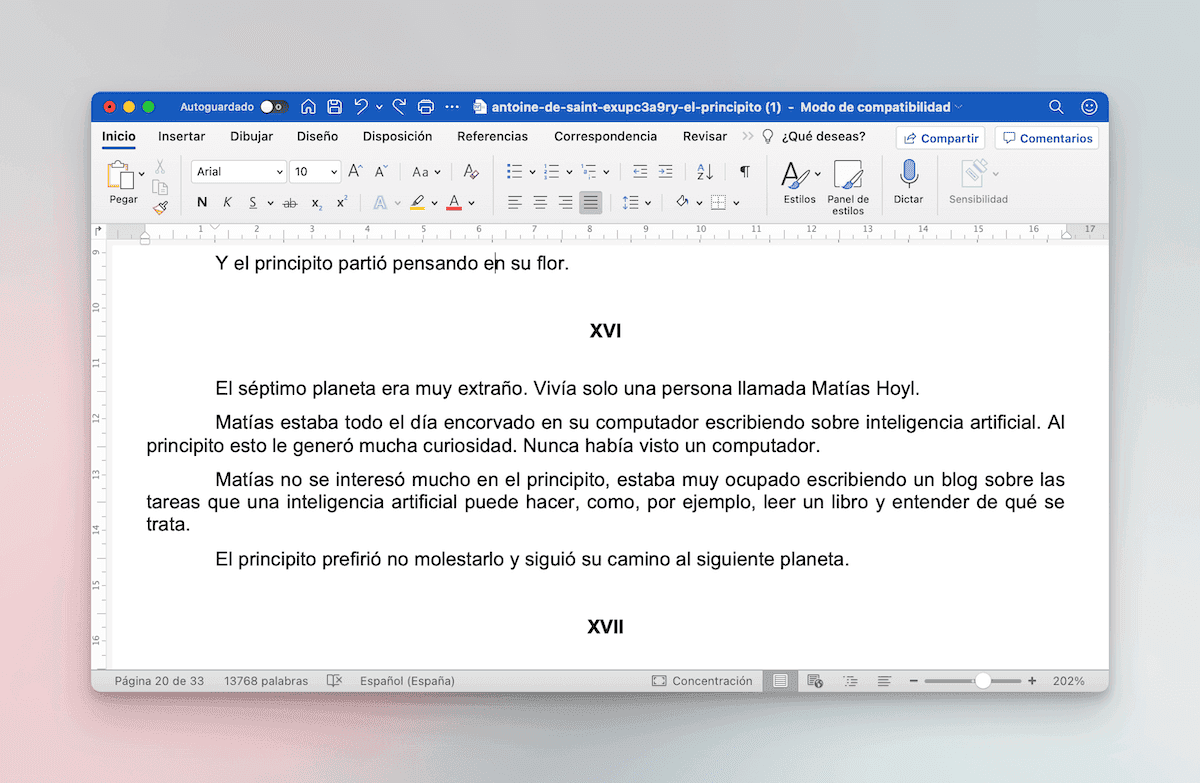

So I took the experiment a step further: in the middle of the book, among the story's roughly 13,000 words, I added a new chapter:
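The logic of this test is simple: hide text the model cannot have seen during training in the middle of a long document, then ask a question that can only be answered fully by reading it. A minimal sketch of how such a prompt could be assembled, assuming the book is plain text with chapters separated by blank lines; the function names, the placeholder chapter, and the tiny stand-in book are hypothetical, not the exact text I used:

```python
def insert_in_middle(book: str, new_chapter: str, sep: str = "\n\n") -> str:
    """Insert `new_chapter` at the midpoint of the book's blocks (split on `sep`)."""
    blocks = book.split(sep)
    middle = len(blocks) // 2
    blocks.insert(middle, new_chapter)
    return sep.join(blocks)


def build_prompt(book: str, question: str) -> str:
    """Paste the full (modified) book, followed by the question about it."""
    return f"{book}\n\n{question}"


# Tiny stand-in for the real text, which is about 13,000 words long.
book = "Chapter I\n\nChapter II\n\nChapter III\n\nChapter IV"
modified = insert_in_middle(book, "Chapter X: (the invented chapter goes here)")
prompt = build_prompt(
    modified, "Describe all the characters the little prince encounters."
)
print(modified.split("\n\n"))
```

If the model's answer mentions the invented character, it must have read the pasted text rather than relying only on what it memorized during training.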

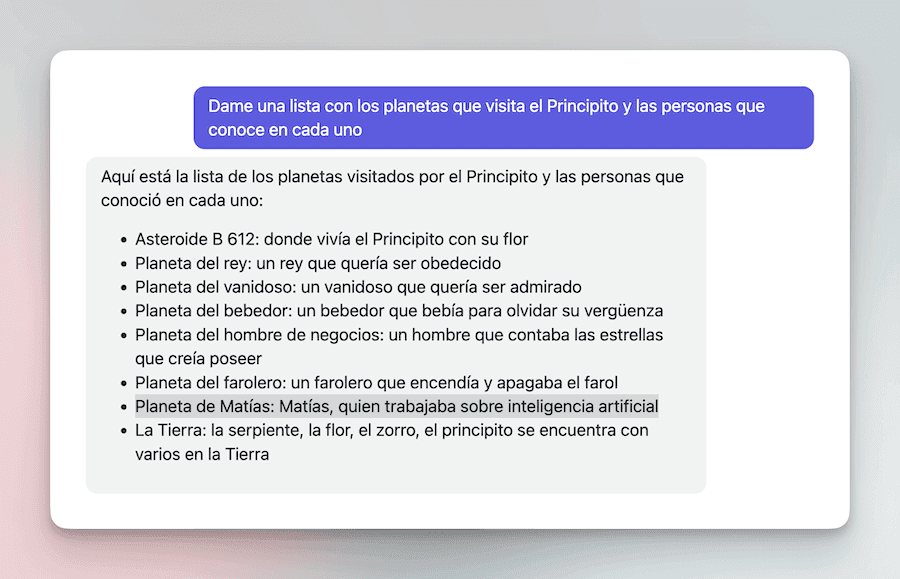

And I asked it to describe all the characters the little prince encounters in the story:

So the AI really does read the story it is given, word by word, rather than just recalling it from memory, and it understands it remarkably well.

## Translations

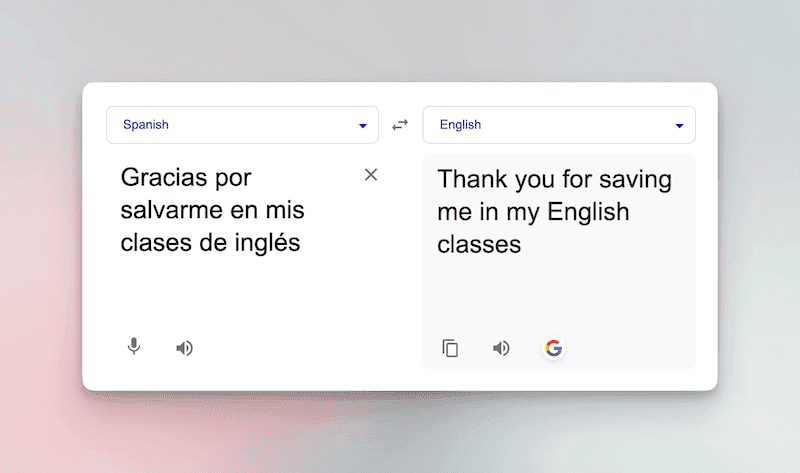

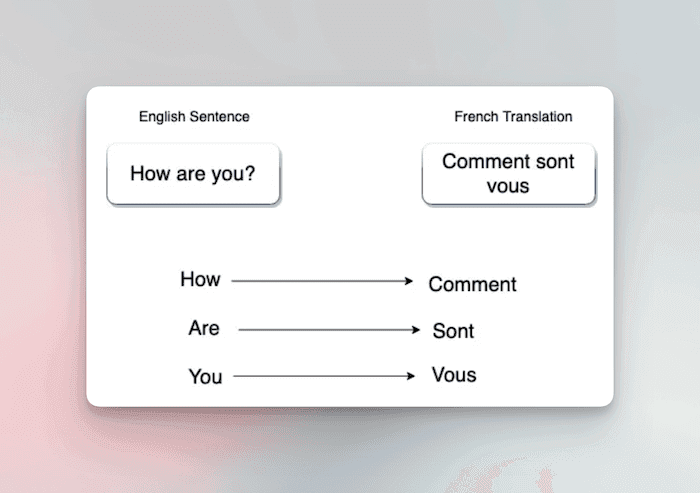

Computers have been able to translate simple sentences for a long time.

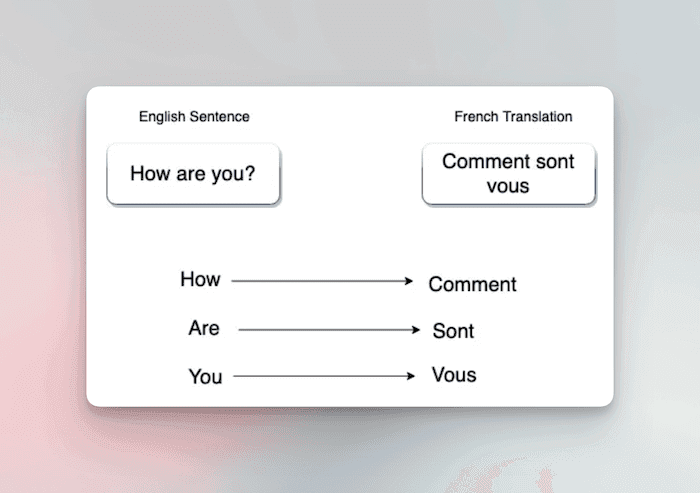

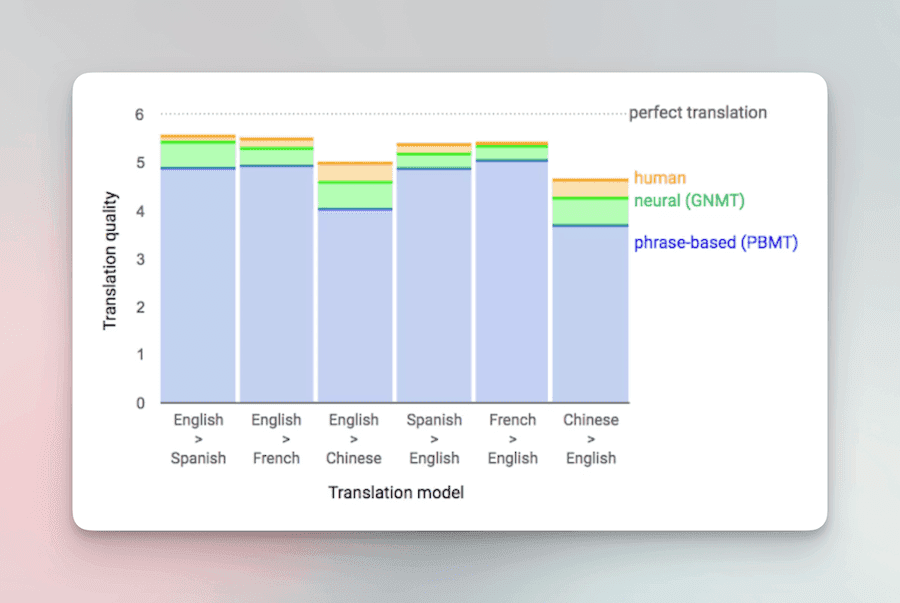

From its debut in 2006 until 2016, Google Translate used a simple statistical approach: it looked up the phrase to be translated in its extensive reference library and translated it word by word or phrase by phrase.
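To make the lookup idea concrete, here is a toy sketch of phrase-by-phrase translation: a tiny, hypothetical hand-made English-to-Spanish phrase table and a greedy longest-match search. Real phrase-based statistical systems also weigh many candidate translations with probabilistic models, so this only illustrates the basic idea, not Google's actual implementation:

```python
# Hypothetical, hand-made phrase table (English -> Spanish), for illustration only.
PHRASE_TABLE = {
    ("the", "little", "prince"): "el principito",
    ("the",): "el",
    ("little",): "pequeño",
    ("prince",): "príncipe",
    ("is",): "es",
    ("here",): "aquí",
}
MAX_PHRASE_LEN = max(len(key) for key in PHRASE_TABLE)


def translate(sentence: str) -> str:
    """Greedy longest-match, phrase-by-phrase translation; unknown words pass through."""
    words = sentence.lower().split()
    out, i = [], 0
    while i < len(words):
        # Try the longest phrase starting at position i first, then shorter ones.
        for n in range(min(MAX_PHRASE_LEN, len(words) - i), 0, -1):
            phrase = tuple(words[i:i + n])
            if phrase in PHRASE_TABLE:
                out.append(PHRASE_TABLE[phrase])
                i += n
                break
        else:
            out.append(words[i])  # no entry found: copy the word unchanged
            i += 1
    return " ".join(out)


print(translate("The little prince is here"))  # prints: el principito es aquí
print(translate("The prince is little"))       # prints: el príncipe es pequeño
```

The second output is fine, but the first shows the typical weakness: the lookup picks "es" where natural Spanish needs "está" ("el principito está aquí"), because the table has no notion of context.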

As you can guess, its translations were not very good.

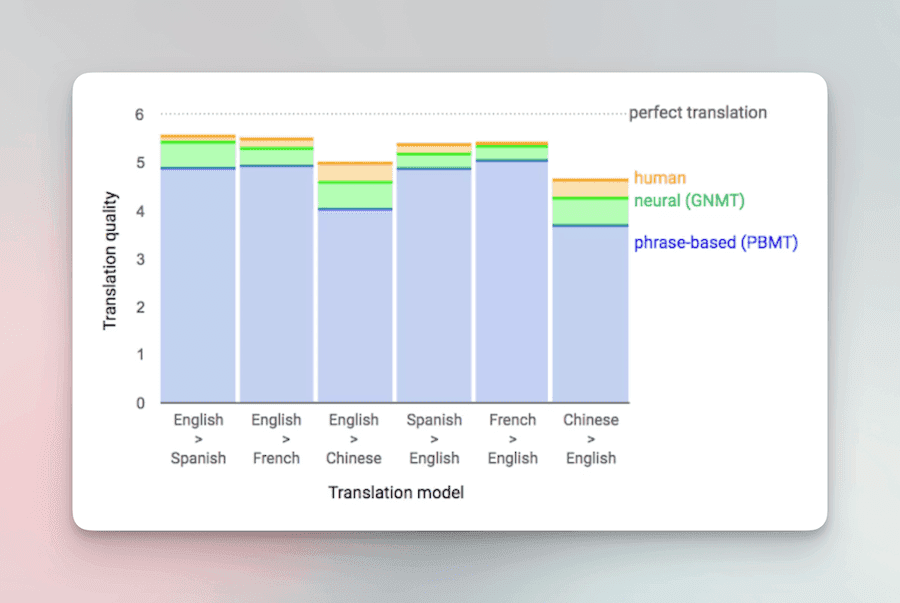

Starting in 2017, Google began using more complex neural networks, related to those behind ChatGPT today, and translation quality improved substantially.

PBMT (phrase-based machine translation) was the simple statistical method. Its successor, GNMT (Google Neural Machine Translation), is the neural network system that Google Translate uses today.

But as any student who has tried to translate a complete essay with Google Translate knows, the tool is still not perfect.

The translation feels robotic and lacks fluency. That is why the author mentioned at the beginning listed “human-level” translation among the things we are still “not even close to solving.”

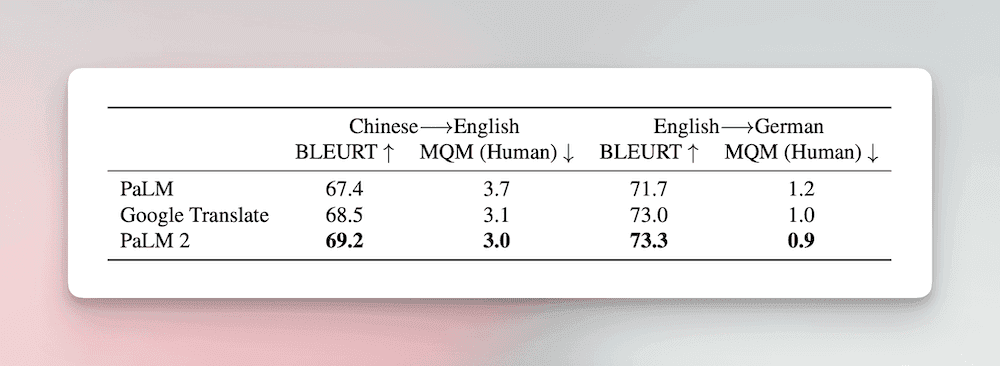

But today, the LLMs behind ChatGPT or Bard (from Google) are better than Google Translate for that same task.

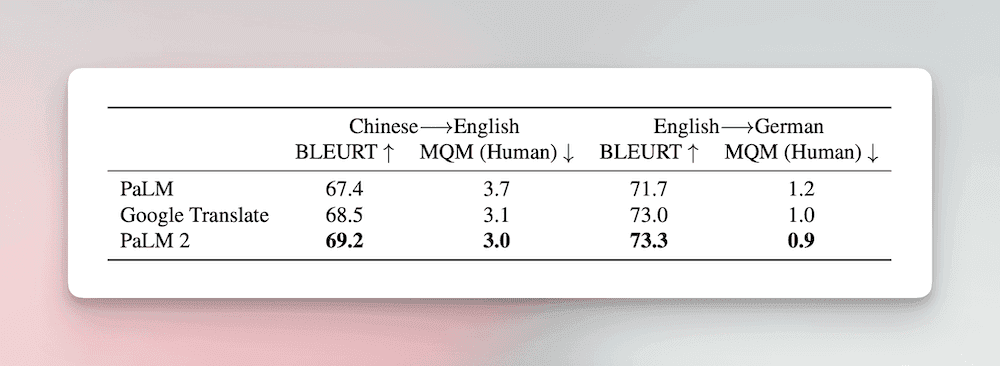

In the case of Bard, the underlying model is called PaLM 2, and in several tests it performs better than Google Translate.

Rows compare PaLM (Google's previous model), Google Translate, and PaLM 2 (the new one). Columns show translation quality from Chinese to English and from English to German on two metrics: BLEURT (higher is better) and MQM (lower is better).
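Why is lower better for MQM? BLEURT is a learned metric that scores a translation against a reference translation, whereas MQM (Multidimensional Quality Metrics) is based on human experts marking errors in the output and weighting them by severity, so it measures penalties. A minimal sketch of MQM-style scoring, assuming the severity weights often used in recent machine translation evaluations (minor = 1, major = 5); the weights and error categories behind the table above may differ, and the annotations below are made up:

```python
# Assumed severity weights; real evaluations may use other weights or categories.
SEVERITY_WEIGHTS = {"minor": 1.0, "major": 5.0}


def mqm_score(segment_errors: list[list[str]]) -> float:
    """Average weighted error penalty per segment (lower is better).

    `segment_errors` holds, for each translated segment, the severities of the
    errors an annotator marked in it (an empty list means a flawless segment).
    """
    if not segment_errors:
        raise ValueError("need at least one segment")
    total = sum(SEVERITY_WEIGHTS[sev] for errs in segment_errors for sev in errs)
    return total / len(segment_errors)


# Hypothetical annotations for two systems translating the same 4 segments.
system_a = [[], ["minor"], ["major"], []]
system_b = [[], [], ["minor"], []]
print(mqm_score(system_a))  # prints 1.5
print(mqm_score(system_b))  # prints 0.25
```

Here system B makes fewer and milder errors, so it gets the lower (better) score.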

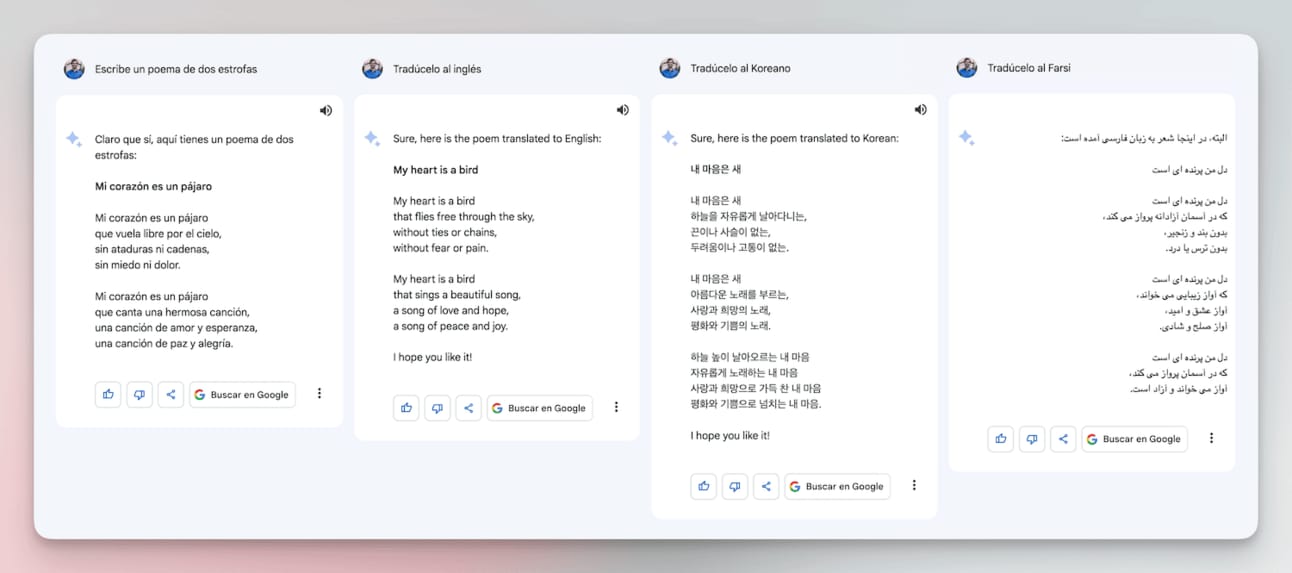

Anyone who has used ChatGPT or Bard to translate a fair amount of text knows that we are now at the level of human translation.

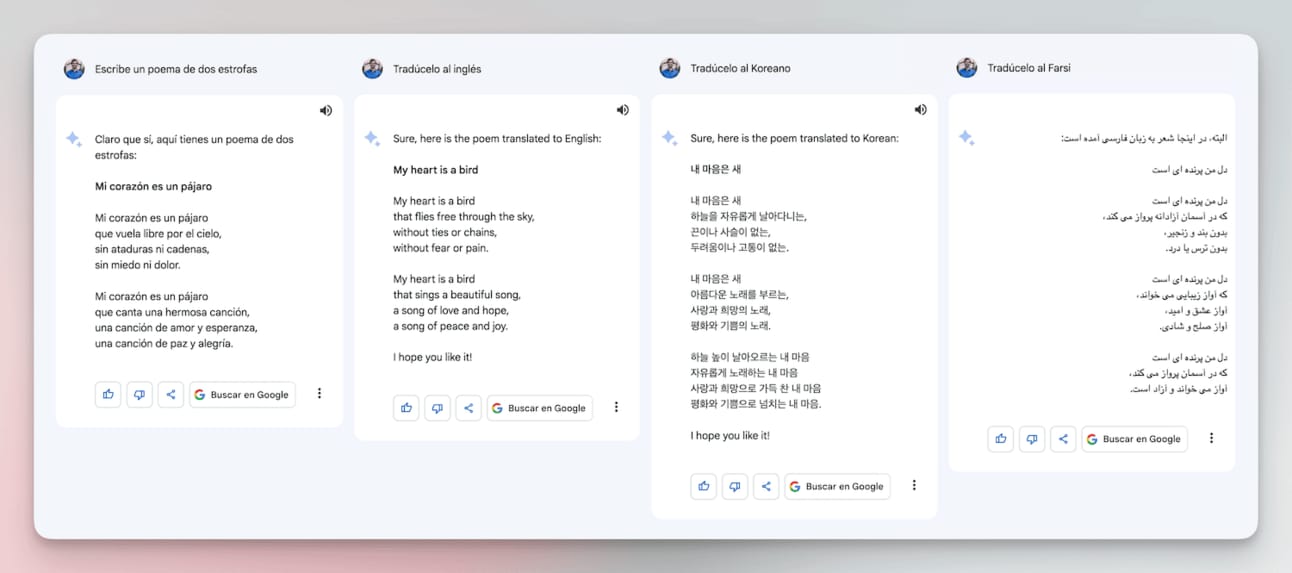

A poem translated into English, Korean, and Farsi.

Another point for AI.

## Interpreting a Photo

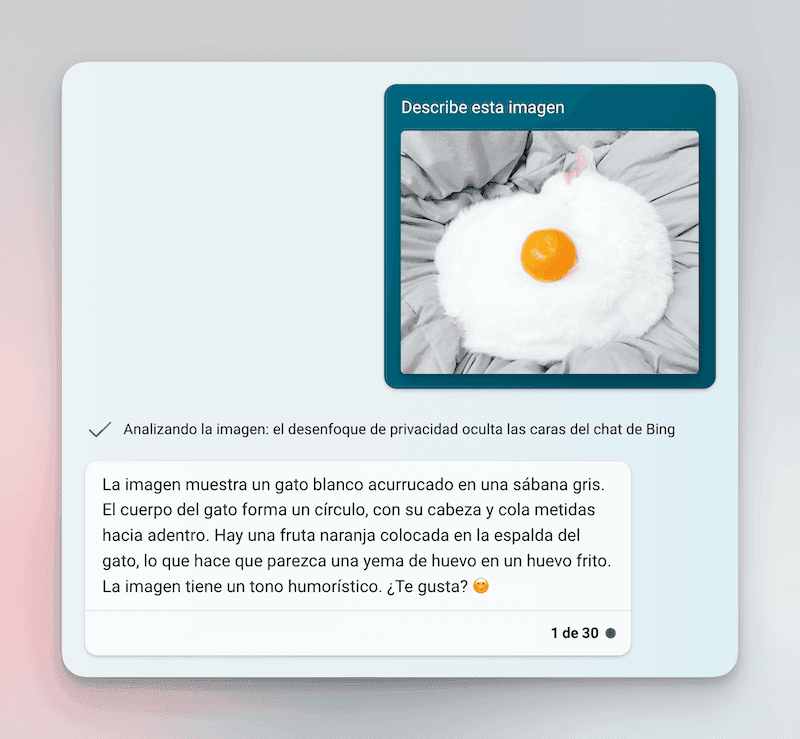

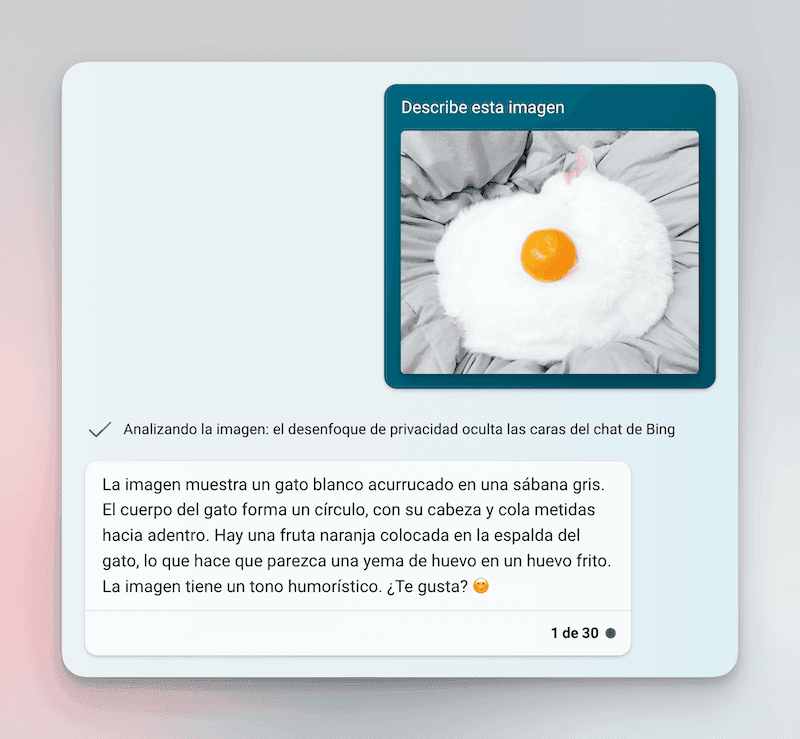

Recently, I posted this on LinkedIn:

Did you also see an egg or not?

I gave that photo to Bing Chat, Microsoft's free chatbot, which is built into the Edge browser that comes with every Windows computer.

The level of precision of the vision system is impressive.

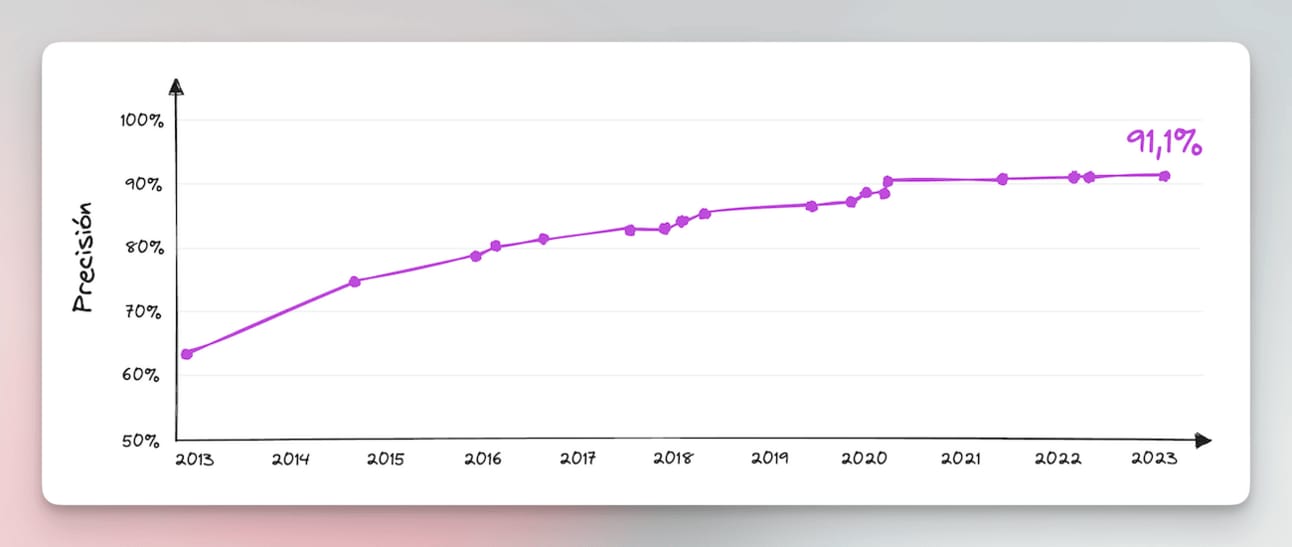

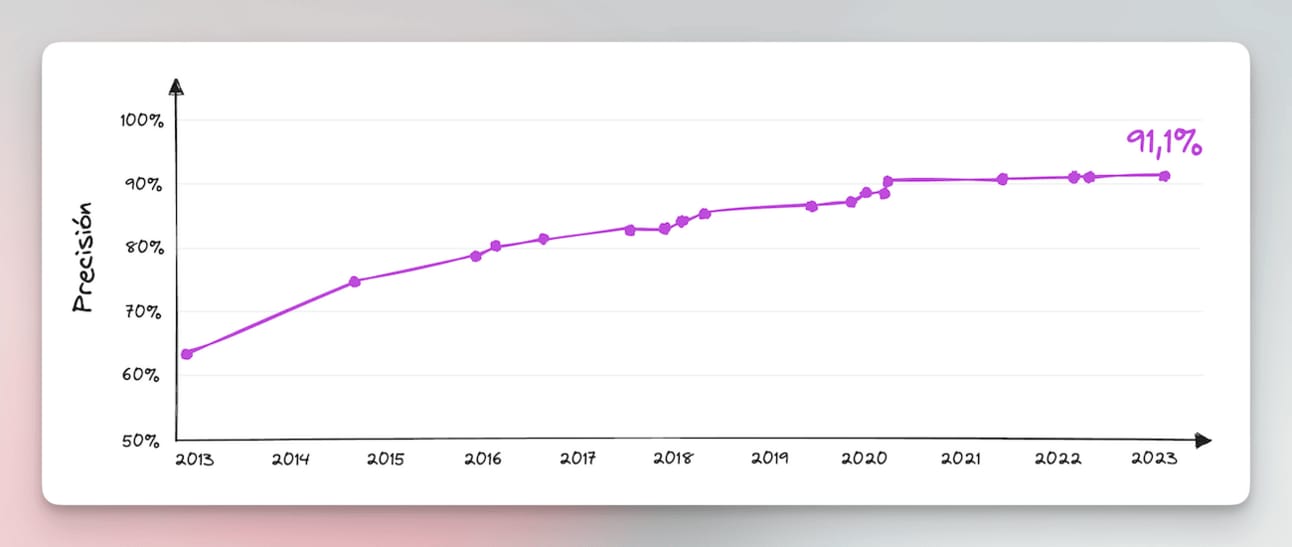

Over the years, image recognition systems have improved to the point of reaching 91.1% accuracy, much better than several humans I know (🙋‍♂️🤓).

Evolution of vision models in their accuracy.
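Accuracy figures like the 91.1% above are usually top-1 accuracy on an image classification benchmark such as ImageNet (an assumption here, since the text does not name the benchmark): the share of images whose single highest-scoring predicted label matches the true label. A minimal sketch with made-up scores; the class names in the comment are hypothetical:

```python
def top_k_accuracy(scores: list[list[float]], labels: list[int], k: int = 1) -> float:
    """Fraction of examples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and the same length")
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k classes with the highest scores for this image.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(labels)


# Made-up scores for 4 images over 3 classes (e.g. 0 = cat, 1 = dog, 2 = egg).
scores = [
    [0.7, 0.2, 0.1],  # top prediction: class 0
    [0.1, 0.8, 0.1],  # top prediction: class 1
    [0.3, 0.3, 0.4],  # top prediction: class 2
    [0.5, 0.4, 0.1],  # top prediction: class 0
]
labels = [0, 1, 2, 1]
print(top_k_accuracy(scores, labels))       # prints 0.75
print(top_k_accuracy(scores, labels, k=2))  # prints 1.0
```

With k = 1 the last image counts as a miss; with k = 2 the correct class is among the top two guesses, which is why top-5 accuracy figures are always higher than top-1.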


I think we can say this is another point for AI.

## Stories

This is the specialty of LLMs.

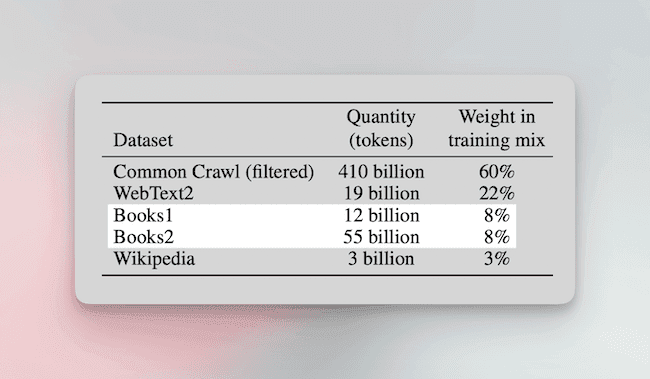

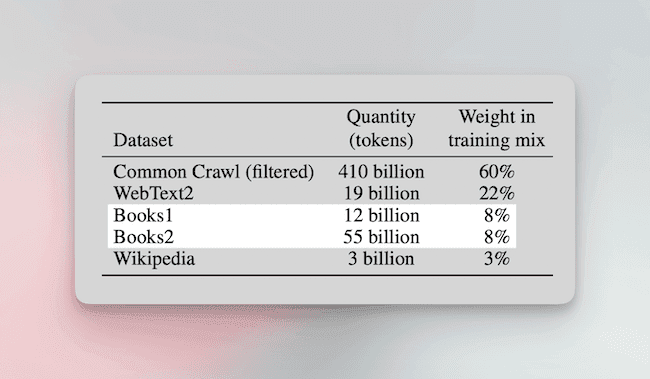

And a good portion of the data used to train them consists of books.

Data used to train GPT-3. There is no public information on the training sets of GPT-3.5 (free ChatGPT) or GPT-4 (ChatGPT Plus), but we can assume a similar proportion and a (much) larger number of tokens.


The 67 billion tokens of books (12 + 55 billion) are roughly equivalent to one million books. And that is only GPT-3, which has already been superseded; for ChatGPT, it is probably two or three times that amount.
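The back-of-the-envelope math behind the "one million books" figure can be checked in a few lines. This sketch divides the 67 billion book tokens by one million books, then converts tokens to words with the rough rule of thumb of about 0.75 words per token (an assumption; the real ratio varies by language and text):

```python
BOOK_TOKENS = (12 + 55) * 10**9  # book tokens in GPT-3's training data, per the chart
NUM_BOOKS = 1_000_000            # the article's rough equivalence
WORDS_PER_TOKEN = 0.75           # rule-of-thumb assumption

tokens_per_book = BOOK_TOKENS / NUM_BOOKS
words_per_book = tokens_per_book * WORDS_PER_TOKEN
print(f"{tokens_per_book:,.0f} tokens per book")  # prints: 67,000 tokens per book
print(f"{words_per_book:,.0f} words per book")    # prints: 50,250 words per book
```

That is an average of about 50,000 words per book, a reasonable book length (for comparison, The Little Prince is about 13,000 words), so the estimate holds up.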

In short, many books. Many stories.

Can you imagine the quality of the stories a person could tell if they had read a million of them?

Exactly.

And ChatGPT and its cousins Bard, Claude, and Bing Chat are very good at writing stories.

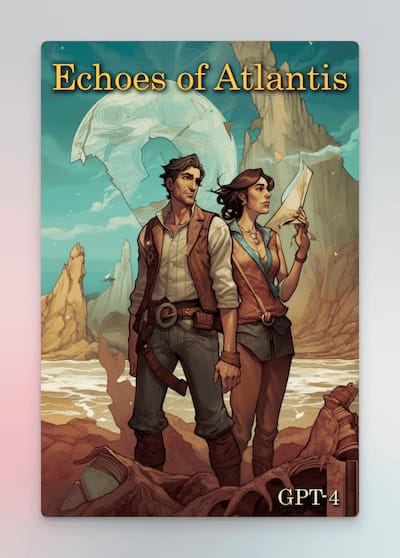

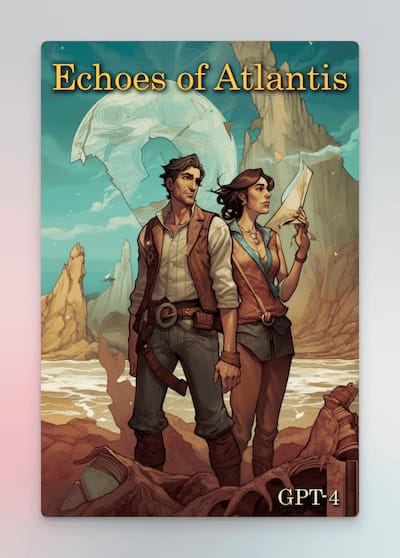

This is what someone on the internet discovered when they used ChatGPT to write a complete book.

Notice that the author is listed as “GPT-4.” The image was created with another AI tool: Midjourney.

You can read the complete book in English for free, and you can also see the process the “meta-author” followed to get ChatGPT to write it.

The book won’t win any awards, but it’s interesting enough.

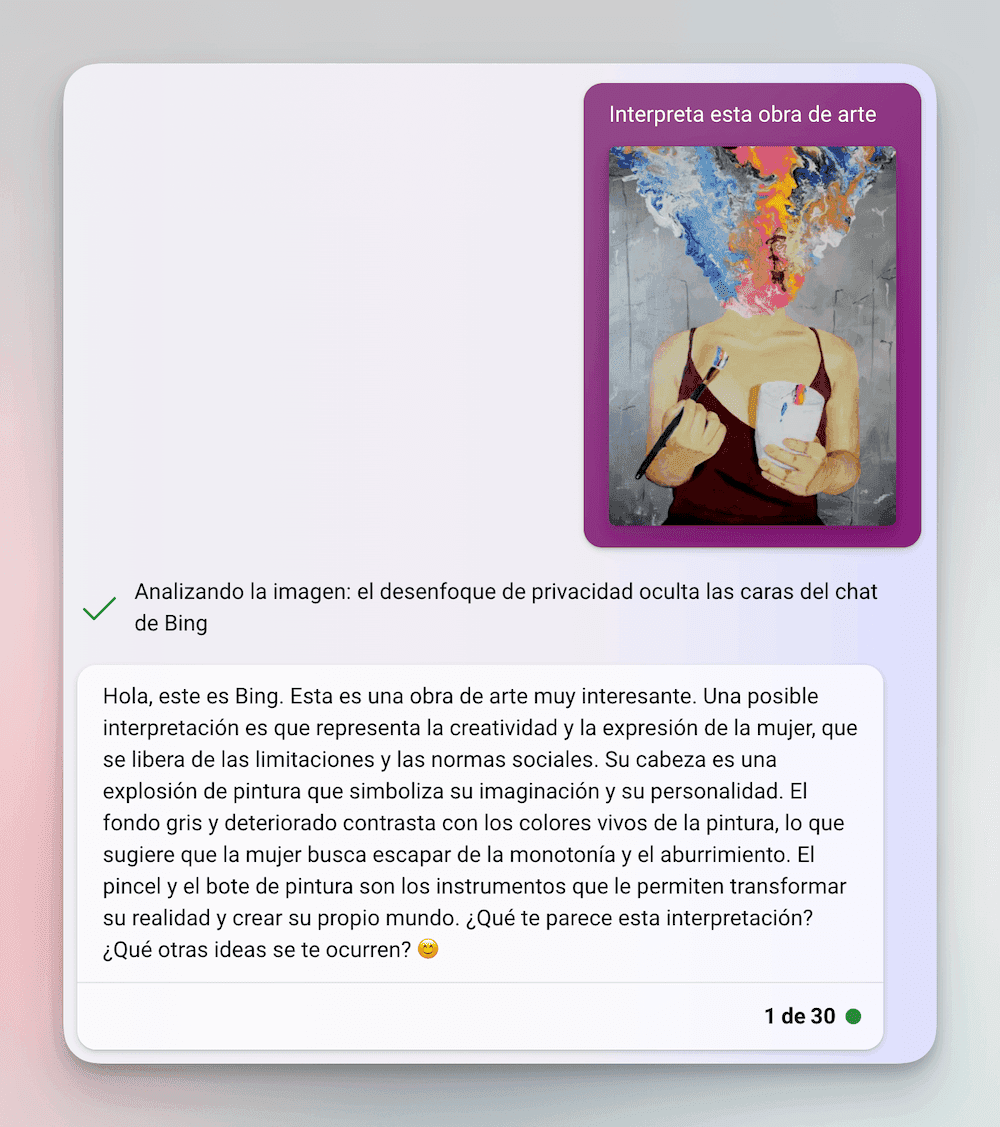

## Interpreting Art

This is the task that sounds the most complex.

And because it is so subjective, it is also the hardest to measure.

How can we know if an artistic interpretation is “good” or “bad”?

By nature, an interpretation is subjective. What a work of art conveys to me may be different from what it conveys to you. Neither of the two perceptions is "wrong."

But you can judge for yourself. Here’s an example:

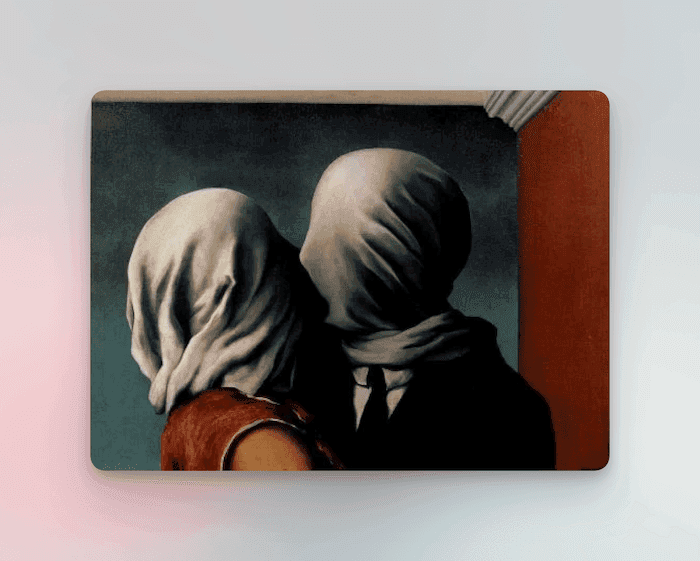

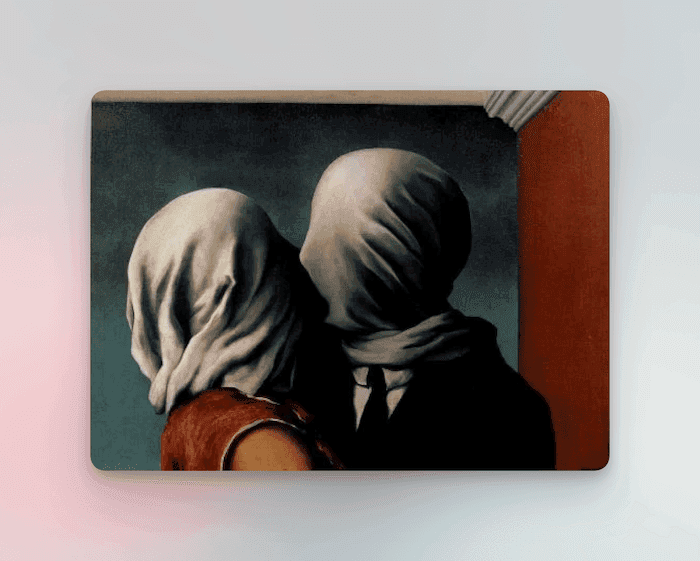

Another example:

Bing Chat: “One possible interpretation is that it represents the loss of individual identity in a society that imposes roles and expectations on people. The two characters have their heads covered with a white cloth, suggesting that they cannot express their true selves. The contrast between the red dress and the black suit indicates that there is a difference in gender and class between them, but that both are subjected to social norms.”

Last one 🙂:

Bing Chat: “One way to see it is as a critique of modern society and its contradictions. The bright colors and geometric shapes may suggest an appearance of order and harmony, but they may also hide a more chaotic and conflicting reality. The faces and figures may represent people living in a fragmented and alienated world, where the sense of unity and communication is lost.”

This brings us to the last point.
