🧩🚀 Micro-decisions produce macro-results

Often, individual decisions, made without any ill intent, lead to unexpected social outcomes.

To explain this, let me tell you the story of a world where there are only blue squares and yellow triangles.

Hello :)

These shapes enjoy diversity. In fact, when they are surrounded only by shapes like themselves, they are not entirely happy.

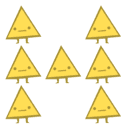

A triangle surrounded by other triangles: boring.

When they find themselves in a clear minority in their neighborhood, they want to move. Specifically, they follow a rule: "I will move if less than one-third (33%) of my neighbors are like me."

It has 6 neighbors and only 1 of them (1/6) is like it. No wonder the triangle is upset.

But when they find a place where the rule is satisfied, they happily stay.

Living in harmony (2/6 = 33% of its neighbors are like it).

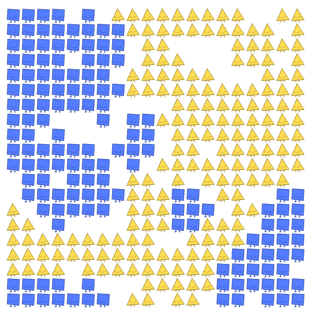
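The rule is simple enough to fit in a few lines of code. Here is a minimal sketch in Python, assuming a shape only needs to know how many of its neighbors are like it. The function name `wants_to_move` is hypothetical, and treating a shape with no neighbors as content is my own assumption. Exact fractions are used so that 2/6 lands exactly on the one-third boundary.

```python
from fractions import Fraction

# The polygons' rule: "I will move if less than one-third (33%) of my
# neighbors are like me." Fractions avoid floating-point surprises at the
# boundary, since 2/6 is exactly 1/3.
MIN_SAME = Fraction(1, 3)


def wants_to_move(same: int, total: int, min_same: Fraction = MIN_SAME) -> bool:
    """True if a shape with `same` like-neighbors out of `total` wants to move."""
    if total == 0:
        # Assumption: a shape with no neighbors at all is content to stay.
        return False
    return Fraction(same, total) < min_same


print(wants_to_move(1, 6))  # True: 1/6 is below one-third, the upset triangle
print(wants_to_move(2, 6))  # False: 2/6 equals one-third, so it stays
```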

What happens if we form a society with these polygons? I imagine their diversity rule will create neighborhoods with enough mix, right?

We start with a completely random board, and the figures move following the 33% rule until they are happy.
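For anyone who wants to experiment, here is a bare-bones sketch of this process, which is essentially the classic Schelling segregation model. Everything here is an assumption for illustration and may differ from the original simulations. The board is square and does not wrap around, a neighborhood is the 8 surrounding cells, some cells are left empty, and every unhappy shape jumps to a random empty cell. The encoding ("S", "T", None) and all function names are hypothetical.

```python
import random
from fractions import Fraction
from typing import List, Optional

# Hypothetical encoding: "S" = blue square, "T" = yellow triangle, None = empty cell.
Grid = List[List[Optional[str]]]


def neighbors(grid: Grid, r: int, c: int) -> List[str]:
    """Shapes in the up-to-8 surrounding cells (the board does not wrap around)."""
    rows, cols = len(grid), len(grid[0])
    return [grid[rr][cc]
            for rr in range(max(r - 1, 0), min(r + 2, rows))
            for cc in range(max(c - 1, 0), min(c + 2, cols))
            if (rr, cc) != (r, c) and grid[rr][cc] is not None]


def is_unhappy(grid: Grid, r: int, c: int, min_same: Fraction) -> bool:
    """Unhappy if fewer than `min_same` of my neighbors are like me."""
    around = neighbors(grid, r, c)
    if not around:
        return False  # assumption: a shape with no neighbors stays put
    return Fraction(around.count(grid[r][c]), len(around)) < min_same


def random_board(size: int, empty_frac: float, rng: random.Random) -> Grid:
    """Half squares, half triangles, plus some empty cells, shuffled at random."""
    cells = size * size
    n_empty = max(1, int(cells * empty_frac))  # at least one free cell to move into
    n_shapes = cells - n_empty
    pool: List[Optional[str]] = ["S"] * (n_shapes // 2) + ["T"] * (n_shapes - n_shapes // 2)
    pool += [None] * n_empty
    rng.shuffle(pool)
    return [pool[i * size:(i + 1) * size] for i in range(size)]


def simulate(grid: Grid, min_same: Fraction, rng: random.Random, max_rounds: int = 1000) -> int:
    """Move unhappy shapes to random empty cells until everyone is happy.

    Returns the number of rounds it took, or max_rounds if it never settled."""
    cells = [(r, c) for r in range(len(grid)) for c in range(len(grid[0]))]
    for round_no in range(max_rounds):
        unhappy = [(r, c) for r, c in cells
                   if grid[r][c] is not None and is_unhappy(grid, r, c, min_same)]
        if not unhappy:
            return round_no
        for r, c in unhappy:
            if not is_unhappy(grid, r, c, min_same):
                continue  # earlier moves this round may have fixed its neighborhood
            er, ec = rng.choice([(rr, cc) for rr, cc in cells if grid[rr][cc] is None])
            grid[er][ec], grid[r][c] = grid[r][c], None
    return max_rounds


def average_same_fraction(grid: Grid) -> float:
    """Average share of like-neighbors across all shapes: a rough segregation score."""
    shares = []
    for r, row in enumerate(grid):
        for c, shape in enumerate(row):
            around = neighbors(grid, r, c) if shape is not None else []
            if around:
                shares.append(around.count(shape) / len(around))
    return sum(shares) / len(shares) if shares else 0.0


rng = random.Random(42)
board = random_board(size=20, empty_frac=0.1, rng=rng)
print(f"random start: {average_same_fraction(board):.0%} like-neighbors on average")
rounds = simulate(board, Fraction(1, 3), rng)
print(f"after {rounds} rounds: {average_same_fraction(board):.0%} like-neighbors on average")
```

Passing `Fraction(1, 2)` instead of `Fraction(1, 3)` to `simulate` reproduces the "raise the bias to 50%" experiment under the same assumptions.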

It turns out the rule does matter. A lot. The result is social segregation (figurative segregation, in this case?).

Each figure has only a small individual bias that, on its own, should go unnoticed. But when it interacts with everyone else's bias, it produces a social equilibrium far from the diversity we would expect.

In other words, a small individual bias can lead to a large collective bias, and the collective bias grows non-linearly as the individual bias increases.

Final result after raising the bias from 33% to 50%.

No figure is bad. In fact, they are much more open to diversity than many of us might be. Each one is making a perfectly sensible decision, yet these micro-decisions add up to unexpected macro-results.

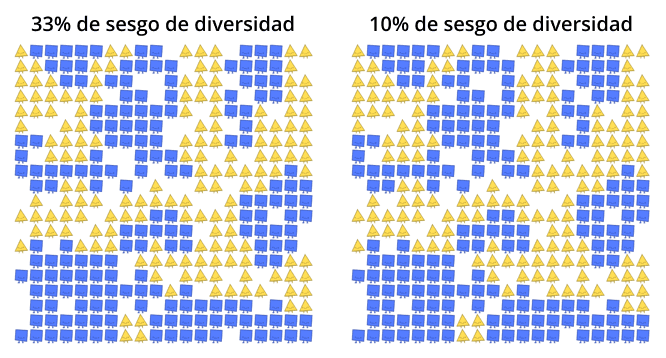

There is an additional problem. When we start from a segregated configuration, it doesn't matter whether we lower the bias from 50% to 30% or even to 10%. Once equilibrium has been reached, the figures have no incentive to move.

Yes, the two boards are exactly the same. There is no movement because all the figures are "happy" with the current configuration.
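Why does lowering the threshold change nothing? Any shape whose share of like-neighbors is at least 50% also clears 30% and 10%. Lowering the bar can never create a new unhappy shape, so an equilibrium stays an equilibrium. The small check below illustrates this. The 6×6 board with no empty cells and the helper `count_unhappy` are hypothetical, chosen only to keep the arithmetic easy to verify by hand. Neighborhoods are the 8 surrounding cells without wrap-around.

```python
from fractions import Fraction
from typing import List


def count_unhappy(grid: List[str], min_same: Fraction) -> int:
    """Count shapes whose share of like-neighbors (8-cell, no wrap) is below min_same."""
    rows, cols = len(grid), len(grid[0])
    unhappy = 0
    for r in range(rows):
        for c in range(cols):
            me = grid[r][c]
            if me == ".":  # "." marks an empty cell
                continue
            around = [grid[rr][cc]
                      for rr in range(max(r - 1, 0), min(r + 2, rows))
                      for cc in range(max(c - 1, 0), min(c + 2, cols))
                      if (rr, cc) != (r, c) and grid[rr][cc] != "."]
            if around and Fraction(around.count(me), len(around)) < min_same:
                unhappy += 1
    return unhappy


# Hypothetical fully segregated board: squares (S) on the left, triangles (T) on the right.
segregated = ["SSSTTT"] * 6

for threshold in (Fraction(1, 2), Fraction(3, 10), Fraction(1, 10)):
    print(threshold, count_unhappy(segregated, threshold))
# 1/2 0
# 3/10 0
# 1/10 0
```

On this board, the shapes along the border between the two halves have 5 of 8 (62.5%) or, in the corners, 3 of 5 (60%) like-neighbors. They are comfortably above every threshold tried, so nobody moves.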

The only way to break the status quo is for some figures to start demanding a minimum of diversity.

Suppose we slightly change the rule to: "I will move if less than 33% or more than 90% of my neighbors are like me." Now the figures move not only when they are in a minority (less than a third) but also when they are an overwhelming majority (they demand that at least 10% of their neighbors be from the other side).
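The modified rule just adds an upper bound to the original check. A minimal sketch, assuming "33%" and "90%" mean exactly 1/3 and 9/10. The function name `wants_to_move` is hypothetical, as is the choice to let a shape with no neighbors stay.

```python
from fractions import Fraction

# Modified rule: move if less than 33% OR more than 90% of my neighbors
# are like me. Exact fractions 1/3 and 9/10 are an assumption.
MIN_SAME = Fraction(1, 3)
MAX_SAME = Fraction(9, 10)


def wants_to_move(same: int, total: int) -> bool:
    """True if the share of like-neighbors falls outside [MIN_SAME, MAX_SAME]."""
    if total == 0:
        return False  # assumption: no neighbors, no reason to move
    share = Fraction(same, total)
    return share < MIN_SAME or share > MAX_SAME


print(wants_to_move(1, 6))  # True: a small minority, as before
print(wants_to_move(6, 6))  # True: 100% like me is now too homogeneous
print(wants_to_move(5, 6))  # False: about 83% is inside the acceptable band
```

On a square grid where each cell has at most 8 neighbors, 7 of 8 is only 87.5%. The upper bound therefore kicks in only when every single neighbor is the same shape, which is a very mild demand for diversity.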

What happens in that case?

We start from the configuration that the 33% rule segregated, but as soon as the figures demand at least 10% diversity, they start moving again.

What's the moral? I take away at least three lessons:

No one is inherently good or bad. We are all making decisions that make sense individually. We shouldn't judge people or societies too quickly. Things are always more complex than they seem.

It only takes a small individual bias ("I move if less than 33% of my neighbors are like me") to generate pronounced social outcomes. This invites me to look at my own biases and evaluate them critically.

Similarly, it takes even less individual impetus ("I move if less than 10% of my neighbors are from the other side") to change entire societies. This motivates me to seek spaces where a small change in attitude can generate positive macro-results.

PS: All credit for the square and triangle simulations goes to this website. It's well worth visiting and playing with the simulators.